2012 – The Most Important Year Yet in Technology

John Mauldin

September 18, 2012

As you’ll recall, I spent much of last week with the folks at Casey Research, at their most recent investment summit. They always put on a top-notch show, and there were many impressive speakers. But, as a big fan of technology, both for its place in the future of our economy and for the sheer coolness factor of some of the amazing things we can now achieve, I really liked one talk in particular.

Alex Daley, editor of Casey Extraordinary Technology (a newsletter I look forward to reading every month), gave an excellent talk on some of the amazing under-the-radar shifts in technology that have taken place just so far this year. While I watch the latest technology developments with some zeal, it is easy to put the potential of a technology well ahead of its practical use – a mistake that can be costly for investors. Alex has proven himself quite adept at calibrating the “application curve,” and I thought we could all learn from what he had to say, so I asked him to upload a copy of his talk to the web for you to see.

You can watch the presentation here:

http://www.caseyresearch.com/articles/alex-daley

or, if you prefer to read a transcript, Alex has written up his notes, which are below.

If you like what Alex has to say, I would encourage you to take his excellent investment advisory service for a spin. I think you’ll find his regular commentary (and stock picks) as insightful as his speech. Plus, he’s very forward about his track record, an uncensored version of which appears in the back of every issue. You can give it a look here: http://www.caseyresearch.com/cm/curing-cancer.

And I’ll also suggest that if you like this forward-looking piece you might also want to check out this op-ed by Andy Kessler in the Wall Street Journal. I spent a long evening with Andy last week in Palo Alto. He’s one of my favorite authors, whose first claim to fame was turning $100 million into a billion in tech stocks and closing it down at the top. Way cool. Since then he thinks and writes and plays with his boys. But he gets the world in a way few do.

Your thinking about the future again analyst,

John Mauldin, Editor

Outside the Box

subscribers@mauldineconomics.com

2012 – The Most Important Year Yet in Technology

By Alex Daley

Many of us fondly remember the announcement that rang with the turning of the millennium: “the first map of the human genome is complete!” It was a momentous achievement to be sure, even if it did take 13 years and cost about $3 billion. But none of that mattered. It was going to usher in the age of genetic medicine, and chronic disease would be a thing of the past. Even aging could be reversed.

So, what happened to all the promise? you may wonder. Well, it’s still there. But the problem is, science fiction authors and technology magazine scribes love to announce the arrival of the future as soon as the first scientific discoveries hint at its possibilities. That creates irrational expectations, because the progress of science – from an experimenter’s vision through to technology that can be widely distributed in a commercially viable way – is not instant. Of course, industry insiders know this, and analysts like Gartner even have entire reports dedicated to the “hype cycle” concept. But, that does not stop the average journalist from prognosticating about the possibilities.

Nevertheless, progress does march forward steadily, in the background. And, when it finally breaks through from the lab to the market… boom! You have an iPhone, a flat screen television, a multi-billion dollar blockbuster drug. The last few years have brought some incredible changes, for sure. But, the big promises – genetic medicine among them – might still seem unfulfilled to many. Still, change can come in bunches, making certain years stand out as watersheds in technology. And, 2012 might surprise you as one of those.

Consider just a few of the most vaunted areas of technology to not quite fulfill their promise just yet:

A Future Without Paper (and Books and CDs and DVDs)

It’s long been the goal of many a business to go “paperless.” To scrap the mounds of pulp and ink that once lined hallways and storage rooms of offices around the world, we pushed the limit on storage, bandwidth and our ability to digitize nearly any piece of information.

Unfortunately, the consumer always trailed a little behind business in this regard. Our lives are replete with stuff. Things. Trinkets. Junk. There is likely some Neolithic impulse driving our desire to line the walls of our homes with the things we’ve collected from our travels, whether to Tibet or the local shopping mall.

And, for a great long time a large amount of that stuff has been media. Books. Records, then CDs. Libraries of VHS or DVD movies. Recorded media has been a big business since the dawn of the mass manufacturing revolution.

Yet, at the turn of the millennium, following the release of countless MP3 players, we were told that, as with the paperless office, the end of physical media was upon us. The recording industry would never survive the era of free digital downloads. The first shots were fired in the late 1990s with the dawn of Napster and the mass swapping (cough, stealing) of songs across college and corporate campuses, and the Internet. Music was going digital and it was never going back.

But, by the end of 2001, following the release of Apple’s iPod, only 1% – a single lone digit – of the sales of recorded music was digital. For years, pundits decried the fall of the record, yet five plus years later the aisles of Walmart and Best Buy were still lined with CDs.

Fast forward to 2012 and the story is much different, however. For this year will be the first in history when 50% of all music media sales globally have gone digital. Music went digital when we all stopped watching the iPod and started paying far more attention to “apps” and tablets and smartphones.

And, books are now following in the footsteps of music, which of course had a head start. Amazon’s Kindle e-Reader, the iPod-like dominator that truly launched the era of the digital book, didn’t surface for consumers until 2007 – a full six years after the iPod. But, don’t let that lull you into thinking it’ll be a while before books go digital. Thanks to the accelerating pace at which new technologies are being adopted once released, the Kindle and the e-book have caught up mighty quickly.

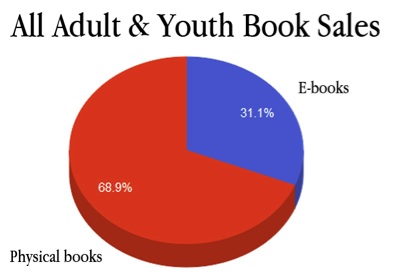

In January 2012, a full 31% of all books other than academic textbooks sold in the US were sold in digital form. That’s all adult fiction, non-fiction, and children’s books. And that was before people started using the tens of millions of tablets, like the iPad and Kindle Fire, and e-Readers such as the revised Kindles and Barnes & Noble’s Nook line of readers, that they gobbled up at stores that very holiday season.

Despite music’s lead, if the growth rate in e-books has been holding through this year, as most surveys of the business indicate is the case, then by this point in 2012, 50% of all new book sales in the US will be digital. That’s just less than half the time it took for the same to happen to music, and ahead of what promises to be another big holiday season for tablets and e-book readers.

Thanks to the Internet, and the new classes of mobile devices, half of all books and music purchases are now done digitally.

Video, however, is the king of media by most measures – most hours per person per week spent consuming it, most revenue by a country mile if you include movies, cable and satellite TV subscriptions, broadcast, all the advertising those bring in, plus physical media. And, video has been a bit slower to see the transition, with only a smidgen of the colossal industry succumbing to the emergence of Internet delivery.

Maybe it’s the fact that video consumes significantly more bandwidth than other media, making it more difficult to download and store large amounts of it. Maybe it’s the tightly controlled licensing of video, which, with music’s struggles as a warning to heed, has held prices high for longer than other media. Or maybe it’s simply the fact that cable television is already as convenient as, or more convenient than, a laptop or a small mobile phone screen.

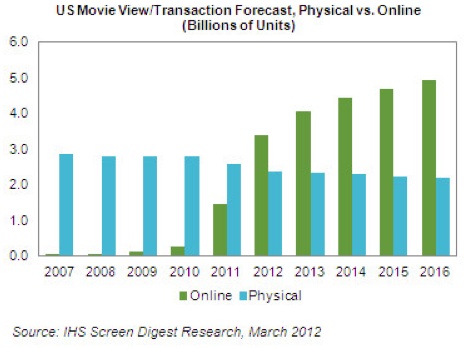

Regardless, cracks are beginning to form in the most formidable analog revenue stream in the world. In 2012, for the first time ever, more movie views will be consumed online than on physical media like DVDs. That’s a major shift from just five years ago, when it was less than 1/10th the volume, according to IHS iSuppli.

Unfortunately for the makers of content, revenues are not quite following the consumption trend. Not yet. Total streaming media sales in 2012 more than doubled from the previous year, to $992 million. However, over the same period of time, physical media sales dropped 12% to $8.8 billion – a decline of $1.1 billion, far larger than the gain at the services that are supplanting them. Much of the gap is attributable to the fact that online movie services don’t tend to get the newest and highest demand content (again because licensors control it carefully), but as we remove the need for inventory, store shelf space and the rest of the costs of physical distribution, price compression will become an undeniable trend.
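To make the size of that gap concrete, here is a minimal back-of-the-envelope sketch in Python using only the figures quoted above. The 2011 streaming base is not given in the text, so the sketch brackets it: "more than doubled" implies a gain of at least half the 2012 figure, and the gain cannot exceed the 2012 figure itself. The variable and function names are illustrative only.

```python
# Figures quoted in the text, in millions of US dollars.
streaming_2012 = 992.0       # streaming sales after "more than doubling"
physical_decline = 1_100.0   # quoted drop in physical media sales

def net_change(streaming_gain, physical_drop):
    """Net change in combined revenue: streaming gain minus physical decline."""
    return streaming_gain - physical_drop

# Assumption: the 2011 streaming base is unknown. "More than doubled" means the
# gain is at least half of the 2012 figure and at most the whole 2012 figure.
min_gain = streaming_2012 / 2
max_gain = streaming_2012

worst_case = net_change(min_gain, physical_decline)
best_case = net_change(max_gain, physical_decline)

print(f"Net change in revenue: between {worst_case:,.0f} and {best_case:,.0f} ($ millions)")
```

Under either bound the net change is negative, which matches the point that streaming growth has not yet replaced the revenue lost from physical media.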

Add this trend to the fact that cable TV subscribers (as a % of households) have fallen for the first time in American history since the introduction of the medium, and you can see why content producers are now increasing licensing rates at a record pace–leading to the costly stand-offs many subscribers are accustomed to seeing, where channels must be blacked out on the backs of failed renegotiations with cable and satellite providers.

The dream of a digital world where all the songs, books, and videos ever produced are virtually at the tips of our fingers is suddenly just about upon us. And, in the process the economics of three very large industries are changing dramatically.

But, just like the smartphone, there is another side to this story that is easy to miss: the middle-classification of the developing world.

Today, there are more smartphones sold each year than there are PCs. The small, power-sipping devices with ubiquitous Internet connections are, for a huge swath of the world’s population, the very first and currently only computer they own. For them the smartphone is not a shrunken-down desktop computer; it is precisely the model for what a computer is. And, for those consumers, digital downloads will also be there from the beginning to complement their mobile computers, fueling growth.

How much? According to McKinsey’s latest report on the subject, in China alone, urbanites earning a middle class income will grow by another one hundred million households over the next ten years.

The spread of mobile media is not going to slow anytime soon.

The Rise of the Robot

Robotics is not a new industry; any autoworker can attest to that. The very first robotic welding system was launched in 1964 in a General Motors plant in NJ. Ever since, the public imagination has been fired by visions of a world where robots replaced deadening human labor in every factory and warehouse, and robotic butlers served our every whim.

While nothing quite like either scenario has occurred in the ensuing years, industrial robotics has been growing at a steady pace, and now has become a $12 billion per year industry. Robots provide precision welding at a breakneck pace; they lift and twist and turn remarkably heavy items; and they take on other work that would be dangerous if not impossible for people to do manually. But, while the industry continues to grow, it does so at an anemic pace, just barely outpacing global GDP growth.

However, there is another half to the market: service robots. Roughly defined, it’s anything not industrial. Or, anything that doesn’t help manufacture something. Instead, service robots help us complete more traditional service tasks, from cleaning to delivery. These helpful robots got their start as multi-armed offspring of their industrial brethren in the medical system, helping perform surgeries too delicate for clumsy human hands alone, like this Da Vinci surgical system from Intuitive Surgical:

The growth rate of medical robotics has been astounding and, as uptake from hospitals has skyrocketed, Intuitive has been one of the most successful stocks of the past decade for investors.

However, these types of human-assisted robotics are still a vestige of an earlier age, where robots were fighting with one arm tied behind their back. Or, rather, they were all arms, but were deaf and blind without even a sense of touch to guide them, making it difficult for them to navigate our complex human world.

That’s now being addressed with a combination of better and cheaper sensors, and a little bit of intelligence. A number of things had to come together to enable robots to take to the ground (and even in some cases, the air):

· Sensors to navigate the world with. Pioneered mostly by the military – for use in spy satellites, fighter planes and night vision goggles – sophisticated sensors now allow robots to avoid falling down stairs or to judge the distance to an object passing through their path, and they have fallen dramatically in price over the years.

· Algorithms to efficiently navigate in a complicated environment. It’s easy enough for everyone except a teenager to figure out how to clean an entire room, but try teaching your laptop how to vacuum. It’s an amazingly hard task for a programmer. Add in people and other moving obstructions you can’t just run into, and it’s taken decades of programming research to teach computers to move in the real world. But we’ve done it.

· The power to get the job done. The same technology that is enhancing electric cars (even if they aren’t yet up to par with gasoline, they are gaining ground steadily and the rate of change will get them there at some point), and making it possible to hold a powerful computer in your jeans pocket, is now giving robots the power sources they need to do serious computation and move around the world for hours at a time. Lower power requirements from the increasingly smaller and cheaper components are helping as well.

Those smarts, eyes and ears, and better power sources have all advanced to the point where we can now strap wheels on our robots and get them to do all sorts of things, like the latest, and cheapest yet, robotic lawnmower from Honda. Saturday mornings may never be the same. And, that robotic grass clipper is just one of an arsenal of gutter cleaning, floor mopping, and vacuuming robots now available to save you hours of labor at home.

While you may have seen a few of these home service robots, or may even own one or two of them like I do, the commercial applications are where the autonomous rubber is really meeting the road today.

Heavy industry and government have been snapping up non-manufacturing robots at an alarming pace. Excluding the already very well known Unmanned Aerial Vehicles (UAVs, or drones) that are flying over much of the Middle East and Asia (and parts of the US) as I type, there has been enormous growth in ground robots as well, like the iRobot PackBot. This versatile arm on tank-style treads is being used by the military to disarm IEDs, and by power plants so they don’t send human beings into lethal radiation when there is a problem like Fukushima. Defense contractors like UK-based QinetiQ have followed iRobot into the market with gun-toting versions of the concept, ready to replace soldiers on the ground.

The Tug (pictured), from venture-backed startup Aethon, roams the halls of hospitals dispensing medication to patients as needed. It can share the halls safely with doctors and patients alike, works around the clock, and makes no costly mistakes (at least not without a human popping in the wrong instructions). Plus, Tug, which has been deployed in over 100 hospitals already, doesn’t mind delivering meals or linens as well. Whatever the job calls for.

Unbeknownst to most, this breed of autonomous robots now wanders the high seas by the hundreds, employed to check for pollutants, measure tides, or just take videos for above-ground observation by landlubber scientists. Even companies like Google and universities like Carnegie Mellon are applying the technology to build self-driving cars.

While much of the promise still lies ahead for this area, the International Federation of Robotics now pegs the Service Robotics market, excluding flying UAVs, at $13 billion annually in gross revenues, vs. only about $600 million in 2000. It now stands as the largest segment of the robotics industry.
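As a rough illustration of what that jump implies, the sketch below computes the compound annual growth rate between the two figures quoted above. It assumes the "now" figure refers to 2012, giving a 12-year span; the text does not state the exact year of the estimate, and the function name is illustrative only.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Figures quoted in the text, in billions of US dollars.
service_robotics_2000 = 0.6
service_robotics_now = 13.0

# Assumption: the current figure is for 2012, i.e. 12 years after 2000.
years_elapsed = 12

growth = cagr(service_robotics_2000, service_robotics_now, years_elapsed)
print(f"Implied growth rate: {growth:.1%} per year")
```

Under that assumption the implied rate works out to roughly 29% per year, far above the near-GDP growth rate the article attributes to industrial robotics.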

Yet the biggest growth is still ahead for the industry, as despite those advances, there is one major impediment still in front of the industry. A roadblock that the wild kingdom conquered long ago, and that still separates us humans from the robots. That is the ability to work in teams.

Pictured below are robots from a company called Kiva Systems:

These robots operate a warehouse exactly the opposite way from how it works today. Instead of people driving around forklifts (maybe even with a few lunchtime beers in them), in order to pick stuff off vast networks of stationary shelves, the shelves drive around and bring the products to the people who load up boxes and fill trucks. In this way, you can reduce the manpower in a warehouse to a small fraction of what is needed today. And do so with laborers who do not qualify for workers comp, vacation at the Jersey shore, or require sleep. Is it any wonder then why Amazon.com bought Kiva out for a few hundred million dollars?

Despite all of these new products and systems, the robotics industry today is still in its infancy. It’s right at that confluence point where all the necessary ingredients converge to allow the industry’s growth to explode, which is exactly what’s happening. We’re a lot closer to the world of the Jetsons, with robot maids, dog walkers, factory workers, and even auto-pilot cars, than I think many imagined would happen by now. The progress is astounding and really just beginning.

Of course, one does have to start wondering what happens when the smart robots get their hands on this next technology. Will they even need us to make them anymore?

I’m kidding … sort of.

An Additive Revolution

Traditional manufacturing is a messy business. There are basically two ways to mass manufacture any given object, and both have considerable economic weak spots.

The first and oldest is the subtractive method. Take a core object and whittle it down to the desired shape. All sorts of manufacturing techniques work like this, from the literal whittling of a piece of wood, to the modern lathe or popular computer-controlled “CNC” routers that fashion much of our modern furniture and more. While effective, there are severe limits on the shapes and structures of individual objects that can be made this way. And it produces large amounts of waste that cannot always be easily reused.

The other arrived with the age of metallurgy: the mold. Refined from what was once a very inexact science, today metal and plastic objects can be cast to fit a master mold of very high precision. However, unlike with subtractive manufacturing, every object cast will be an exact copy of the original used to make the mold. Sometimes that is highly desirable. But not always, as when you want a product customized. In addition, similar limits on the output constrain what can be done in a single step, and thus many parts often must be cast and assembled later, even when made from the same material. Plus, the cost of making molds can be prohibitive when you require a large number of different parts.

However, with the rise of the computer in the 1980s, another form of manufacturing was conceived and began to take hold: 3D printing.

The idea is simple. Take a powder, like the ink in an inkjet printer. Spray down a thin layer, then use heat or chemicals to bond the powder together into a solid object. Then layer on some more and repeat until you slowly, one layer at a time, build up a 3-dimensional object. Hence the term additive manufacturing. Control the entire process by computer, and you can fuse layers together to build almost anything.
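The layer-by-layer idea can be sketched in a few lines of Python. This is a toy illustration, not how any real printer's software works: it treats the object as a grid of small cubes, asks a shape function which cubes are solid, and builds the model one horizontal layer at a time from the bottom up. The sphere shape and all function names are assumptions made for the example.

```python
def sphere(radius):
    """Return a shape function that is True for points inside a sphere."""
    def inside(x, y, z):
        return x * x + y * y + z * z <= radius * radius
    return inside

def build_layers(inside, half_size):
    """Build an object bottom-up, one layer at a time, as additive methods do."""
    coords = range(-half_size, half_size + 1)
    layers = []
    for z in coords:                      # one pass of the print head per layer
        layer = [[inside(x, y, z) for x in coords] for y in coords]
        layers.append(layer)              # the new layer is fused onto the stack
    return layers

def render(layer):
    """Draw one layer as text: '#' where material is deposited."""
    return "\n".join("".join("#" if cell else "." for cell in row) for row in layer)

layers = build_layers(sphere(4), half_size=4)
print(render(layers[len(layers) // 2]))   # the widest layer, through the middle
```

Changing the shape function changes the object without any change to the build loop, which is the flexibility the article contrasts with molds.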

The premise may be simple, but the implications are far-reaching. Unlike traditional subtractive manufacturing, there is little to no waste in the process. And, thanks to the generic nature of the machines, able to control the layers one at a time from a computerized model of the object to be built, one object can be created at a time with customization, much like with a router or lathe – but far more flexible than mold-based processes.

A single copy of a thousand different objects, or a thousand copies of a single one, can be created with no change in the economics of doing so, just as a home printer can print a letter one moment and a photo the next.

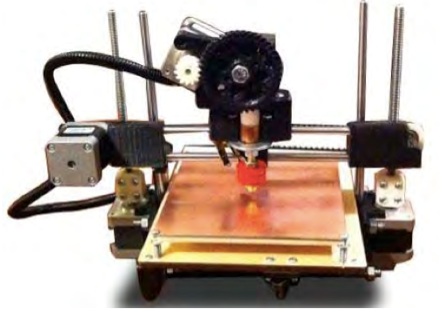

Now, machines like this one from 3D printing conglomerate Stratasys are showing up on shop floors everywhere from the aerospace to automotive industries, and from architectural offices to high school classrooms:

Despite its genesis in the 1980s, the technology has taken decades to perfect. Once only able to work on brittle types of plastic, systems have now been created to work with a wide variety of practical materials like flexible plastics, or steel and aluminum, all the way to the emerging ideas of biological materials or even… chocolate. Printed biomatter is still a research experiment, unfortunately. But the other applications have taken off like bottle rockets in recent years.

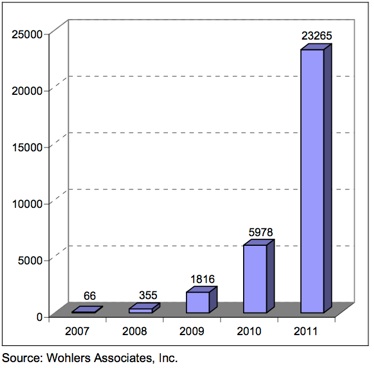

Sales of industrial additive manufacturing (AM) systems in recent years have experienced a growth rate of over 35,000%:

Use cases like BMW’s underscore the importance of the technology. During the design phase of a new or improved vehicle, BMW will go through many iterations of a part to get it exactly right. Previously, they used CNC routers to create prototype fixtures for the car. However, when they moved to using a printer from Stratasys – one of the largest 3D printer manufacturers in the world – BMW was able to reduce the cost of each test part by 58%. Better than that, their wait time for a newly designed part fell 92%. That’s an astounding change to the financials of the process, and a huge leap forward in terms of their ability to bring a design to life quickly.

Sales are being propelled by a rapid increase in the speed and precision of the machines, as well as the aforementioned explosion in available materials. They are moving forward so fast that researchers at Vienna University of Technology have recently set a world record for both size and speed using a “selective laser sintering” style machine. They built a scale model of an F1 car that is only a fraction the width of a human hair, just a few nanometers in size. And, they did so at a rate equivalent to 5 meters of material per second – a blistering pace by any manufacturing standard.

While this type of work still requires highly precise machines with steep price tags, the prices are dropping rapidly. And, that is thanks in large part to the group of people who did the same for the personal computer: hobbyists.

3D printers are nearly as numerous in the personal world as the industrial one. To date, it is estimated that more than 50,000 personal 3D printers have been purchased or built. Open Source projects like the RepRap, a partially self-replicating printer (it can print a number of its own parts), and a fervent community referred to as Makers are driving down prices rapidly. According to market researchers Wohlers Associates:

“Printrbot is another example of a RepRap derivative. The goal of Printrbot’s Brook Drumm was to raise $25,000 at Kickstarter, which was met in December 2011. Drumm went on to receive pledges of $830,827 from 1,808 backers. The small machine sells as a kit for $549–699.”

3D printing is a remarkable emerging industry, and at approximately $500 million in annual revenue today, has a great future ahead of it, for both consumers and investors. The technology is just now making the turn up that hockey stick-like growth curve, and over the long run may have as much effect on manufacturing as the PC had on knowledge work – an economic revolution of immense proportions.

The Biological Renaissance

The biggest unfulfilled promise of all has been from medicine. Wired magazine and Popular Science have filled our heads for decades with images of microscopic robots flooding through our cells, scrubbing plaque from our arteries, eating tumors, helping boost our immune systems. There were to be pure genetic cures for nearly every disease imaginable, from Alzheimer’s to cancer.

How many of these scenarios have come to pass? Very few, as we can all see. But life-changing medicine is close. Biotechnology is only beginning to arrive at a point of confluence, thanks to: the rapid acceleration of computing power in the last decade, the tools and techniques that were pioneered in the research boom following the completion of the human genome project, and the advances in working at the nanometer (i.e. sub-cellular) level made by the semiconductor industry over the past two decades.

The original human genome project was like the invention of the lever or the wheel. A remarkable accomplishment in its own right, but more importantly a toolset that would come to be used for all sorts of ends, constantly refined to be both better and cheaper over time.

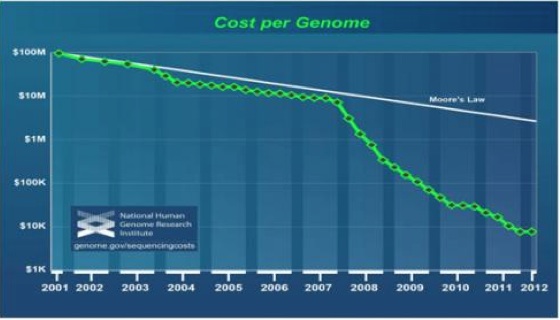

That first genome sequencing took 13 years to accomplish, at a gross cost of over $3 billion. Come 2012, the cost of sequencing a new complete human genome has fallen to just $10,000, and the job takes about one day. That is an increase in speed and decrease in cost that would make even Gordon Moore blush. And, that is not the end of the advance. Genetic sequencing is well on its way to $1,000 or less, according to most biotech industry analysts, while machines with superfast, sequencing-specific chips will reduce basic decoding to a matter of hours in the near future.
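The comparison with Gordon Moore can be checked with a short calculation. The sketch below estimates how often the cost of sequencing has halved, using the two cost figures quoted above. It rests on two assumptions that are not in the text: that roughly 12 years separate the first genome from the 2012 figure, and that Moore's Law is taken in its common form of a doubling about every two years.

```python
import math

def halving_time_years(start_cost, end_cost, years):
    """Average time for the cost to halve, given the total drop over a period."""
    halvings = math.log2(start_cost / end_cost)
    return years / halvings

# Figures quoted in the text, in US dollars.
first_genome_cost = 3_000_000_000
cost_2012 = 10_000

# Assumptions (not from the text): about 12 years between the two figures,
# and Moore's Law stated as a doubling roughly every 2 years.
years_elapsed = 12
moore_doubling_years = 2.0

halving = halving_time_years(first_genome_cost, cost_2012, years_elapsed)
print(f"Sequencing cost halved about every {halving * 12:.1f} months")
print(f"That is roughly {moore_doubling_years / halving:.1f}x the pace of Moore's Law")
```

Under these assumptions the cost halved about every eight months, roughly three times the pace usually associated with Moore's Law.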

Today, more than 50,000 complete human genomes have been recorded for medical study. That number will double again by next year. And, literally billions of individual genetic tests are now being performed each year.

All this investment into understanding our genes, and the rest of our biology, has begotten the rise of numerous new industries that were merely speculations in the scientific papers of a decade ago.

Three prime examples:

2012 marked the first commercial sales of an antibody drug conjugate for use in humans.

In the early 1980s, the study of “monoclonal antibodies” – our bodies’ own immune systems’ master keys that can unlock the doors of virtually any complex cell – was just coming into fashion. Even at that time, the potential of so-called antibody drug conjugates was becoming obvious to scientists. One of the more pressing problems facing the designers of modern pharmaceuticals, and especially biological drugs, has been how to defeat the incredible defense systems our cells have erected to prevent contamination and infection. But antibodies are designed to do exactly that, and in a highly targeted fashion, finding only the cells they fit and promoting intra-cell treatments without needing a massive engineering project to do so. Harnessing that unique property, and using it to deliver anything from chemical agents to DNA-targeting drugs, has long been a goal of scientists keen to open up all sorts of new therapeutic possibilities.

Washington-based Seattle Genetics, whose work to encapsulate chemotherapy agents into monoclonal antibodies won approval from the FDA in late 2011 for the treatment of Hodgkin lymphoma and anaplastic large-cell lymphoma, has been pioneering this space. Their future planned products include a version of the same formula that targets renal cell carcinoma, pancreatic, ovarian and lung cancer, as well as multiple myeloma and several types of non-Hodgkin lymphoma. The continued success of antibody drug conjugates could mean a major leap forward in our ability to attack diseases that have previously proven nearly impossible to treat effectively.

2012 marks the culmination of well over a decade of research into pathway inhibitors with the marketing of the first anti-cancer drug of its kind.

Another major focus in cancer research is moving beyond the traditional “slash, burn and poison” regimen of surgery, radiation, and chemo, and into a host of new therapies that aim straight at the biological roots of cancer. One such approach has been to target the replication ‘pathways’ that enable cancer to grow unabated in our bodies. By interrupting these core growth factors, cancer’s progress can be slowed or halted, allowing doctors more time to effectively treat tumors. This small molecule technology aims directly at the biological mechanisms that make cancer tick, and has the potential to eventually change the way we treat cancer altogether.

Small outfit Curis, Inc. has been at the forefront of developing pathway inhibitors. Partnered with Genentech, Curis won approval for Erivedge in January 2012. This remarkable new drug treats Basal Cell Carcinoma, an often disfiguring type of skin cancer that has previously resisted treatment. While there is still a long path ahead for the technology, it is one of the most promising new types of cancer therapy now becoming available to doctors and patients, because of the rapid increase in biological research capabilities.

But, of course, the true holy grail of medicine has been, since the days of Watson and Crick, direct genetic treatments. After all, 2 out of 3 people will die from conditions that have been shown to have strong genetic roots – cancer, heart disease, and diabetes among them.

Thus far, progress along this line has been slow. Despite the rapid advances in the cost and speed of genetic sequencing, and the huge public and private investments into genetic medicine, little has changed since the 1990s when permanent genetic modification experiments were all but halted due to disastrous results.

In order to move genetic medicine forward, we needed a working paradigm to even approach the problem. Our genes are incredibly complex bits of code, which function in ways that are still imperfectly comprehended. But, as our understanding has increased, so have our methods for manipulating these processes. That is why in 2006 the Nobel Prize in medicine went to the inventors of a technology called antisense, or more specifically, RNA interference. Antisense technologies turn off the expression of particular genes in a way that is predictable and temporary, promising the ability to treat patients with simple injections or even pills for genetically-rooted disorders. The potential of gene silencing drugs is beyond compare in biology today. So, how far along is this technology?

2012 will also mark the first time an antisense drug – a temporary off switch for gene expression – has been approved for wide use.

Kynamro, an antisense drug from Isis Pharmaceuticals and Genzyme, targets patients with familial hypercholesterolemia: those patients whose high cholesterol is linked to the production of a protein dubbed apo-B for short. The result is a significant decrease in production of LDL (i.e. bad) cholesterol for a subgroup of patients who have been otherwise resistant to more traditional treatments like statins. The FDA is now considering Kynamro, with a positive decision expected by January of 2013. Regulatory approval is pending in Europe, as well.

2012 also featured major advances in the treatment of countless other diseases, from hepatitis C to lupus to leukemia, both in the lab and through trial approvals. Biotechnology is advancing at a pace as rapid as that showcased by the falling price of genetic sequencing. Entirely new markets like biological molecular testing are emerging to make advances not just in treatment, but in the diagnosis of diseases.

While sectors like robotics were founded well back in the 1960s, with research dating back well before that, the biological revolution only really got underway once we: were able to reliably work at the nanometer level; had the computer power to deeply analyze and store millions of chemical sequences; developed specialized silicon and fluidic systems tailored to analyzing biological compounds; and invented tools to study and understand and manipulate the complex structures that underlie life itself.

The era of biological medicine, just like robotics, additive manufacturing, and digital content, is now upon us. Like any technological revolution, it will take decades fully to develop. Yet as it rounds into form, the $750 billion per annum drug industry, and our lives, will never look quite the same again.

Alex Daley is chief technology investment strategist at Casey Research, a leader in subscriber-supported investment research. He has spent his career working with emerging technologies, collaborating with scientists and entrepreneurs as an angel investor, as a consultant to venture capitalists, and as a member of the famed Bill Gates research team at Microsoft. Today, he and his team work to identify the most promising up and coming public companies in areas such as software, Internet services, semiconductors, and biotechnology.
